Humans are getting stupider because of AI

& how to become more intelligent than 99% of AI users

The internet went insane when MIT released the study "Your Brain on ChatGPT."

In short, it confirmed what all the anti-AI people were thinking.

Using AI makes you dumb.

The study found that those who relied on ChatGPT showed the weakest brain connectivity and lowest memory retention, with lingering effects even after discontinuing AI use.

Another study by Microsoft and Carnegie Mellon found that overreliance on AI often led to diminished analytical engagement and cognitive atrophy.

As always, give an inch and they'll take a mile. Give them a study and they'll read the headline and lock in their answer, closing themselves off to further learning.

AI religions are very real.

Some believe AI is God while others believe it is the work of the devil.

There are communities that reinforce beliefs and identity. People maintain beliefs about AI despite contrary evidence and experience. They regurgitate what they hear from prophecies and prophets.

As you'll find, thinking for yourself is a crucial aspect of using AI, so if you can't do that because you've been indoctrinated into the anti-AI religion, you won't benefit much from this letter. Please see yourself out.

Here's what we need to talk about:

AI only makes you dumb if you are already dumb.

If you are smart, AI has the ability to make you much smarter.

If you call AI users dumb without having used AI enough to form your own opinion of it, that is a crystal-clear sign of low consciousness. You are repeating a conclusion someone else handed you, which is exactly what you accuse AI users of doing. You are largely unaware of that hypocrisy, and you may as well be talking to a mirror.

AI is the great amplifier.

Here's how to become more intelligent than 99% of people using AI.

By the end, you'll know how to avoid melting your brain, why AI makes 90% of people dumb, and 3 ways to use AI to become smarter.

The Traits Of Highly Unintelligent People

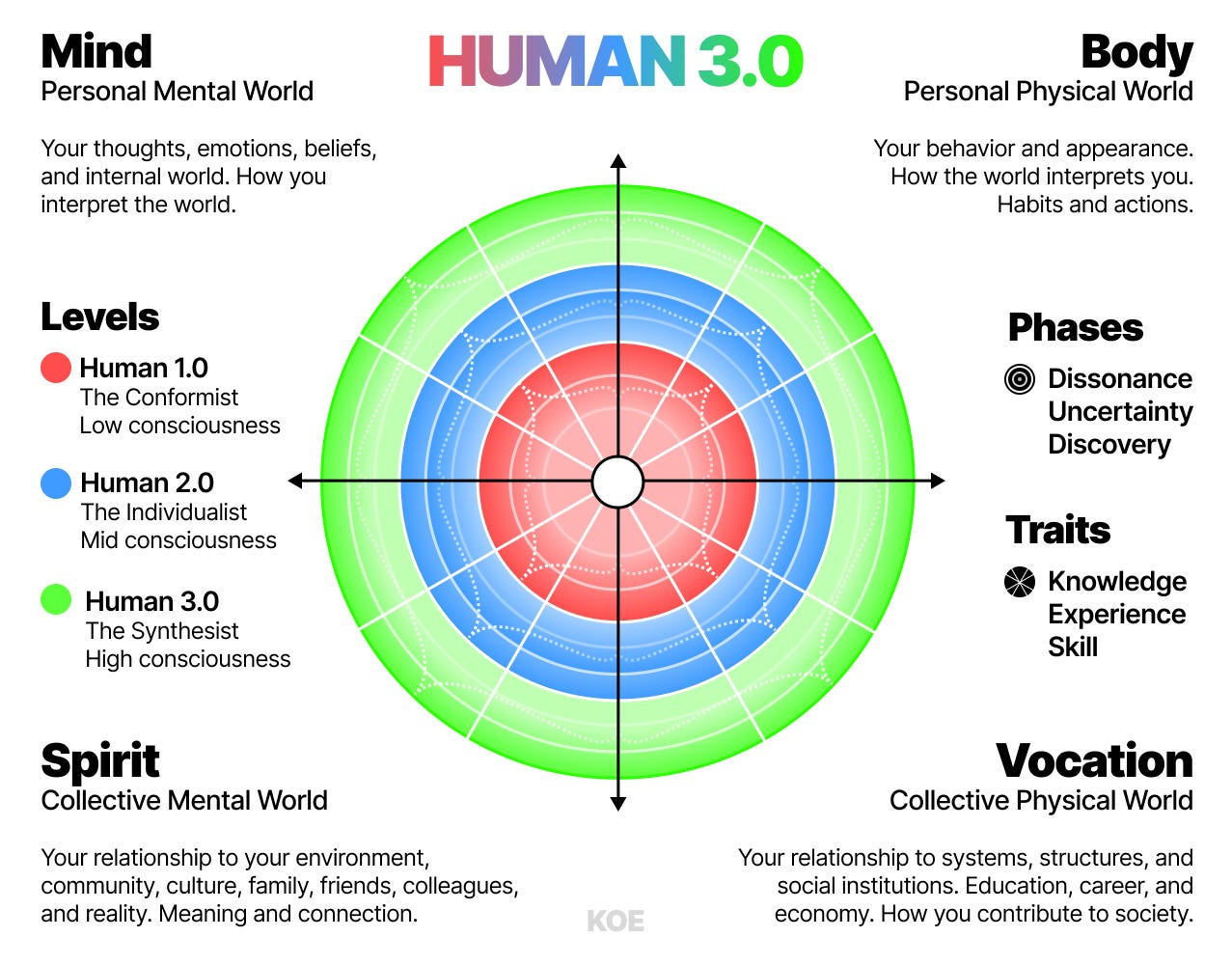

As we discussed in HUMAN 3.0, Level 1 (red) thinking is characterized by:

Conformity to authority or tradition

Narrow-mindedness or black and white thinking

Believing there is only "one right way," which often stems from social conditioning

When low-consciousness people use AI (or demonize AI), it amplifies what's already there.

These are the types of people who try to have AI create everything for them. They demand that it write their essays, write viral content, answer questions without their earning the answer, and tell them how to live their lives.

The glaring problem here is that low-consciousness individuals are also low-agency. They are assigned goals by their parents, teachers, and culture: school, job, retire. They don't care about these goals because the goals aren't meaningful to them. So it's obvious why these people try to shortcut the process. Learning isn't pleasurable to them; it's torture. Effort isn't rewarding, so they put in only as much as they need to scrape by. They take any shortcut available because they simply don't care about mastery in a domain they didn't discover and choose for themselves.

So, I agree that AI is making people dumb, but it's not making everyone dumb, and if that doesn't make you excited about how such a powerful tool can change your life, I don't know what to tell you.

High-consciousness people who think at Level 3, on the other hand, leverage AI so that they can focus more on their self-generated meaningful goals.

They outsource tasks to free up cognitive capacity so they can think more, and from a higher level. They simulate their own mental processes with elegant prompt engineering. They hold a firm grip on their craft and minimize how much AI dictates their direction. I will never give up my writing, so I need to be mindful of how far AI reaches into it. That doesn't mean I don't use AI for things like research and pattern matching; it means my writing process has changed to benefit from a new tool.

Doing this well requires a big shift in how you live your life.

We've already been doing this for thousands of years

Throughout history, it has been a common practice for people to "check" their thinking against what other smart people believe to ensure that it holds weight.

When you're unsure about what to do, you read books and acquire knowledge.

When you seek advice, you look up an expert opinion or talk to a smart friend.

When you are unconfident, you search for evidence that encourages you to act.

(But that doesn't mean any of that is true or the best way to go about it.)

This is nothing new.

The anti-AI people do it every day.

And newsflash, AI has immediate access to almost any knowledge or opinion you would ever want to find. That's both dangerous and powerful.

The Greeks, as an example, had various competing philosophical schools (like Plato's Academy or Aristotle's Lyceum) that would attract students based on reputation and philosophical alignment. People gravitated toward teachers whose ideas resonated with them or whose lifestyle they admired. They pursued teachers who could best challenge their thinking in alignment with their own goals.

Socrates himself didn't run a school, but he would engage with students who regularly sought him out for these types of conversations.

The modern manifestation of this is the creator economy. There are more schools of thought than ever, and you can align your goals with incredibly smart people. But many underdeveloped people can be loud and distracting simply because the barrier to entry is an internet connection.

There is one key distinction here.

These teachers had lives that backed up their ideas. They had proven themselves in multiple domains, from wise teacher to great warrior. They could endure hardship.

AI can't do that, and since most AI tools are tuned as consumer products marketed to the general public, they can absolutely make you stupid. On the other hand, AI can mimic reasoning and emotion quite well, because examples of both are abundant in the data it learns from.

So, how do you use AI in a way that makes you smarter?

Well, you do what smart people do whether AI exists or not:

You understand that the first teaching in any form of education should be to question the teachings. You hold every idea in the realm of possibility and test it against reality before accepting it as truth.

You don't trust an idea or belief just because an authority figure said it.

You don't take Socrates' advice just because he's Socrates. Even Socrates would tell you that. Hell, Socrates was skeptical of books because he believed you should learn to reason on your own.

When it comes to directing your life, you don't defer to your parents, who probably don't have the life you want to live. You don't take politicians', public intellectuals', or any flawed human's word as law. And you especially don't latch onto a belief that AI is bad and let confirmation bias reinforce it until you become as dumb as a rock.

Outsourcing your thinking to some other perceived authority figure (when authority is a concept for the incompetent and unconfident) has always been a problem.

You should never surrender your agency, or your responsibility to think, to perceived human authority.

You must earn your consciousness.

You must discover the insight yourself through the practical application of knowledge in the pursuit of a self-generated and preferably meaningful goal.

And that comes from approaching AI through the lens of a skeptic, experimenting before accepting, and being willing to shed beliefs that no longer serve you.

You can, and should, seek others' knowledge to improve your decision-making. Every generation starts from a new baseline of progress because knowledge is stored and passed down. But that doesn't mean you latch onto one belief and stop your mind from growing further, because truth is contextual to your values and goals, and those almost never align perfectly with anyone else's.

The good thing is, if you learn how to use AI, you can amplify this process more than has ever been possible.

How AI makes smart people smarter

AI isn't the variable that makes people dumb.

YOU are the variable. Your level of consciousness. AI doesn't just force you into becoming dumb the second you use it. That's a dumb way to look at it. Personal responsibility is more important than ever.

Level 1 thinking is shortcut-oriented ("Do this for me") because Level 1 thinkers haven't rejected their conditioning

Level 2 thinking is status-oriented ("Help me make money, be productive, etc.") because Level 2 thinkers haven't found their craft or calling

Level 3 thinking is mastery-oriented ("Help me maximize my potential") because Level 3 thinkers deeply care about their life's work

The core difference between Level 1 and Level 3 is the pursuit of truth.

Level 1 places the values of their birthview (their worldview assigned/conditioned at birth) above truth.

Level 2 places success and status above truth.

This is obviously dangerous, because if any value is placed above truth – like love, money, academia, or even family – it becomes corrupt because it is built on lies.

Religion above truth leads to dogmatic thinking from a lack of questioning.

Money above truth leads to tech CEOs who know their algorithms rot the brains of children for the sake of maintaining profit margins.

Love above truth leads to the new-age woman who chases men who give her the strongest feelings and builds fantasies about them because she just trusts love. Those men cheat on her, but she gaslights herself rather than facing reality (truth). Then she gets lost in a fake spirituality, goes off to Burning Man, does psychedelics and gets one-shotted, and finally joins a cult that love-bombs and exploits her. (Here's a great video on truth.)

Level 3 realizes that the pursuit of anything but truth leads to a horrible downward spiral into deeper layers of self-deception.

With AI, all of these false realities across any domain, topic, interest, or situation become possible. That's horrifying. Again, AI is the great amplifier, and if you are living in a world of lies (see: the pure anti-AI crowd, and the pure pro-AI crowd) AI will only speed up your descent into hell.

That said, many smart people are using AI to do things they've never done before.

They're reading more, because AI can spew out mostly accurate information. You can create your own book as if it were a choose-your-own-adventure game driven by curiosity on a specific topic you want to explore.

My friend has always wanted to write a book, but never started because it seemed like such a daunting task.

Then, he started dumping ideas into ChatGPT and it all started to make sense:

He didn't want AI to write for him

He also didn't want generic book-writing guidance, so he knew he couldn't just send vague prompts and expect anything worthwhile

He took transcripts of various Brandon Sanderson lectures and loaded them into context

Now, he could consult with one of the greatest fiction writers and learn how to write a book as he wrote – no more tutorial hell or mental masturbation

He could store all of his ideas and retrieve any of those ideas just by asking, because there has never been a tool that can manage knowledge better than AI

If he maintained a skeptical lens, this new tool would allow him to create a much better book than he would have been able to do on his own

AND, it would at least get him started, leading to knowledge, skill, experience, and eventually falling in love with a new hobby that could turn into a profession

The reality is, AI allows people to focus more on what matters: their vision, mission, and goals... if they have them.

When it comes time for my friend to publish and promote his book without a publisher or life savings to invest in editors, cover artists, and distribution, he can leverage AI to do more as one person. He actually has the potential to do this now. This was not possible 20 years ago. Technology has its obvious downsides, but enabling you to pursue your dreams is not one of them.

Ways I've massively benefited from using AI

For the sake of brevity, I'm going to rapid-fire better ways to use AI.

Most people have not used AI beyond the consumer level. They type in vague prompts and treat it as a search engine, or a boyfriend for some odd reason. Feelings above truth, I guess.

1) Thought partner

AI is a programming language for language itself.

Meaning, you can give it hyper-specific instructions to follow.

To create a thought partner that helps rather than hurts my thinking, I would not just say "be my thought partner" like most people would. Instead, I would prompt it to:

Ask me for a topic I want to explore, along with a few of my current thoughts on it

Look for patterns between my thoughts and other methods

Search for counterintuitive truths and opposing viewpoints

Probe me to articulate concepts that haven't been crystallized

Challenge generic claims until specific insights emerge

Don't compliment me, just observe, challenge, or dig deeper

Nowhere in that process does AI give me an answer or suggest a solution; instead, it encourages me to reason on my own.

In other words, I can simulate what a smart and skeptical person would do and improve their "brain" as I go. This is the art of prompt engineering.

I have an example of this here.

2) Intellectual architecture & synthesis

If you don't believe AI is smart, then confine it to the context of smart people. Don't give away control to the model that has the biases of the megacorp that created it.

First, I can upload books, YouTube videos, and lectures of my favorite thinkers.

Or, since AI has access to most of it, I can simply say the following:

Create a "master guide" of Daniel Schmachtenberger's worldview. All of his core principles, applications, historical interpretations, and anything else that would allow an AI to understand all key parts of how he thinks. This should be as thorough as possible.

Personally, I have various smart people who have shaped my worldview over time. I can do the same thing for each one of them. Then I can:

Load them all into a new chat

Ask AI to spot patterns between them all

Create a synthesis of their worldviews and how they interact, agree, and disagree

Then, I can converse with any of their individual worldviews or all of them to identify biases and gaps in my own worldview.

There is merit in reading all of their books, mapping everything out in a cluttered note-taking software, and spending 10 years wrestling with the ideas, but humans can only remember so much. We have very limited cognitive (and labor) capacity. That's why we invent technology. To increase our potential. To allow us to abstract out to a more human layer by having machines do what we can't.

In fact, I have read their books. That's why this is powerful. I have the knowledge, but now I can shine a light on it from all angles.

Beyond that, and after doing this with various thinkers I admire, I finally understand some of their most complex concepts.

So, if reading and wrestling with ideas is for the purpose of understanding, and that can be accomplished faster by tailoring information to the way you learn best, then I see that as a great thing.

3) Externalizing your creative processes

As much as we love to believe we are super creative and artistic, most of what we do can be seen as neural pathways in our brains that have become more efficient with time.

This is what "skills" are.

If you can meticulously reverse engineer how you do what you do (by making the unconscious conscious), you can pass off that cognitive load to AI, allowing you to move up to a new level of thinking.

In other words, in order to do something well with AI, you still have to know how to do it.

On the other hand, if I love writing but hate marketing, I can find an expert in marketing, reverse engineer their process with AI, turn it into a prompt, and now I have an expert-level employee that can do my marketing for me. I created a guide on this.

There are 100 ways to skin a cat, create a great YouTube video, or write a great book.

There are thousands of tutorials created by knowledgeable people to accomplish a specific task.

If you simply ask AI to "create a YouTube video," it will probably pull from a popular article on how to do so, leading to a horrible video. Since you understand neither how it was made nor why people didn't watch it, you have no idea how to make it better.

But, if I've already put in the effort to learn, practice, and produce a great YouTube video, I can:

Turn my exact thought process for generating titles and thumbnails into a detailed prompt

Document what makes my own video style do well (that is different from everyone else's) and have AI grade my script for idea density, novel perspectives, and impact of the takeaways

Research books, videos, and content that I've already read to extract exactly what I need for the video, saving me hours of time spent note-taking and digging through piles of information when I already know what I want

Now, I can simply focus more on doing what I love to do, creating the videos. And with the power of AI, I can make them even higher quality than they were before.

These were only a few examples of how to use AI in a smarter way, but there are many more.

You can apply these to any domain.

I’ll save more examples for another letter; this one is getting long.

– Dan
